How do I set up the remote-mcp-chat client?

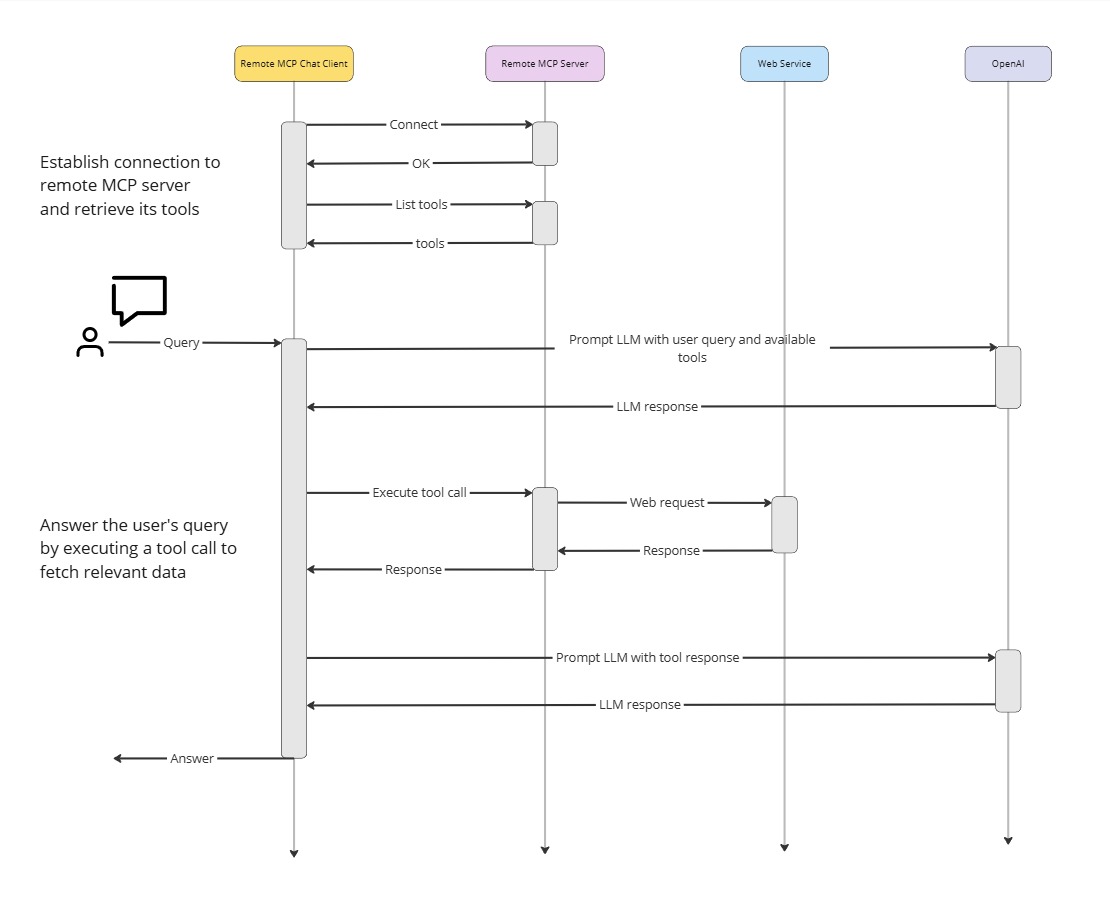

Create a `.env` file containing your OpenAI API key and the MCP server URL, set up a Python 3.10+ virtual environment (the project uses uv for this), install the dependencies, and run `client.py`.
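The `.env` step can be sketched with the standard library alone. This is a minimal illustration, not the project's own loader: the variable names `OPENAI_API_KEY` and `MCP_SERVER_URL` are assumptions (check the project's README for the real ones), and the helpers `parse_env_text`, `load_env_file` and `missing_keys` are hypothetical. In practice a project like this may use python-dotenv's `load_dotenv()` instead, which likewise leaves existing environment variables untouched by default.

```python
import os
from pathlib import Path

# Hypothetical variable names; the real project may use different ones.
REQUIRED_KEYS = ("OPENAI_API_KEY", "MCP_SERVER_URL")


def parse_env_text(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and '#' comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one layer of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Read a .env file (if it exists) and copy its values into os.environ.

    Variables already set in the real environment are not overwritten.
    """
    env_path = Path(path)
    values = parse_env_text(env_path.read_text(encoding="utf-8")) if env_path.exists() else {}
    for key, value in values.items():
        os.environ.setdefault(key, value)
    return values


def missing_keys(values: dict[str, str]) -> list[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]
```

Calling `load_env_file()` and then `missing_keys(...)` at startup lets the client fail early with a clear message instead of a confusing error on the first request.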

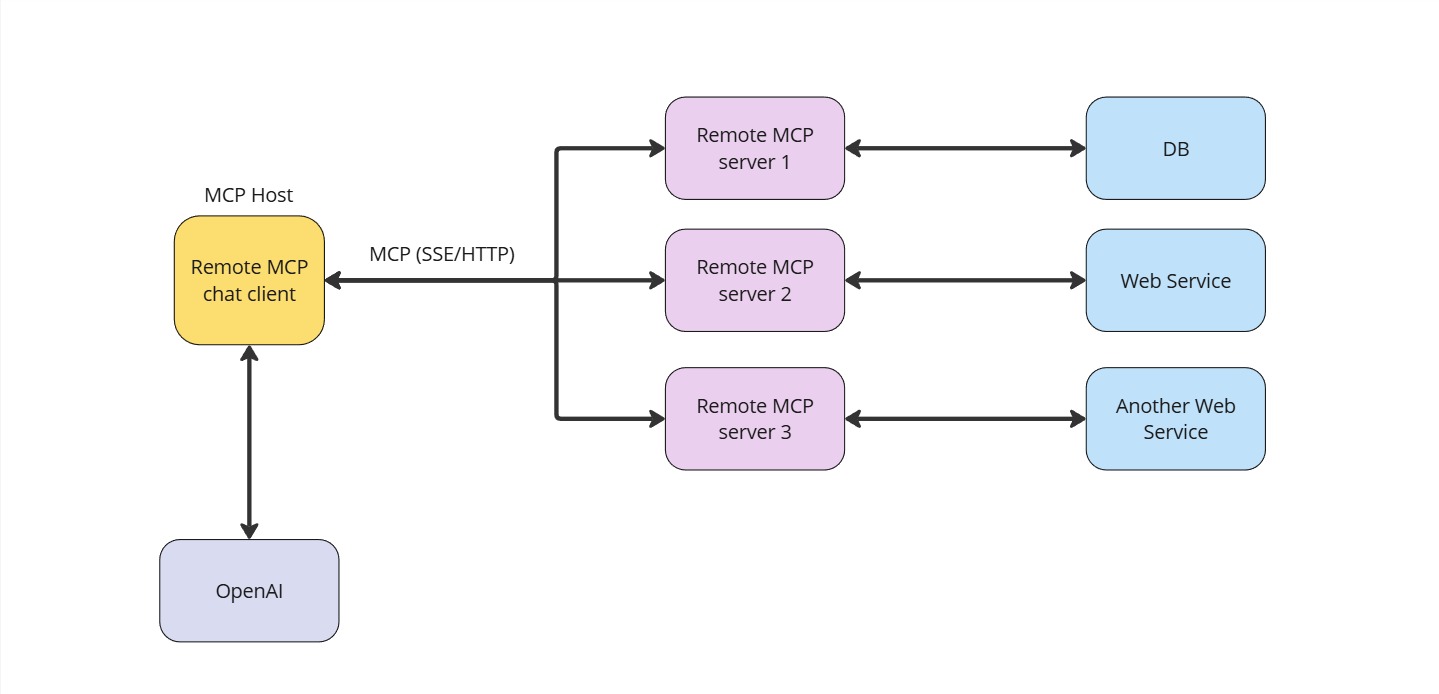

Can remote-mcp-chat connect to any MCP server?

Yes, it connects to any compliant remote MCP server using the URL specified in the `.env` configuration.
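Because the server is chosen purely by URL, a cheap sanity check before connecting can catch typos in `.env`. A minimal sketch: `validate_mcp_url` is a hypothetical helper, and it only checks the URL's shape (an http or https scheme plus a host). It cannot tell whether the server actually implements MCP.

```python
from urllib.parse import urlparse


def validate_mcp_url(url: str) -> str:
    """Return the stripped URL if it looks like a usable remote endpoint.

    Raises ValueError for a non-HTTP(S) scheme or a missing host.
    """
    cleaned = url.strip()
    parsed = urlparse(cleaned)
    if parsed.scheme not in ("http", "https"):
        raise ValueError(f"MCP server URL must use http or https, got {parsed.scheme!r}")
    if not parsed.netloc:
        raise ValueError("MCP server URL is missing a host")
    return cleaned
```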

What LLM providers does remote-mcp-chat support?

It primarily uses OpenAI but can be configured to work with other providers like Claude and Gemini through the MCP server.

Is remote-mcp-chat suitable for production use?

It is designed as a simple client for development and testing; production use may require additional customization and security hardening.

What dependencies are required to run remote-mcp-chat?

Python 3.10+, uv for environment management, and the Python packages listed in `pyproject.toml`.
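The Python 3.10+ requirement can be enforced at startup so users get a clear message. This is a sketch under the assumption that such a guard is useful here; `check_python_version` is a hypothetical helper, not part of the project.

```python
import sys

# The FAQ states Python 3.10+ is required.
MIN_PYTHON = (3, 10)


def check_python_version(version_info: tuple = tuple(sys.version_info)) -> None:
    """Raise RuntimeError if the given (major, minor, ...) is below MIN_PYTHON."""
    major_minor = tuple(version_info[:2])
    if major_minor < MIN_PYTHON:
        found = ".".join(str(part) for part in major_minor)
        raise RuntimeError(
            f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ is required, found {found}"
        )


if __name__ == "__main__":
    check_python_version()
    print("Python version OK")
```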

How does remote-mcp-chat handle authentication?

Authentication is managed via API keys set in the .env file, typically for OpenAI and the MCP server endpoint.
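A sketch of how keys read from `.env` might be turned into request headers. Two assumptions here: that the MCP server accepts a bearer token in an `Authorization` header, and that the key lives in a variable such as `MCP_API_KEY`; both are hypothetical, as are the helper names. Masking the key before logging avoids leaking it into output.

```python
import os


def bearer_headers(env_var: str) -> dict[str, str]:
    """Build an Authorization header from an environment variable.

    Assumes a bearer-token scheme; the real server may expect something else.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"{env_var} is not set; add it to your .env file")
    return {"Authorization": f"Bearer {token}"}


def mask_secret(secret: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters, for safe logging."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```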

Can I extend remote-mcp-chat with additional features?

Yes, since it is open source and Python-based, you can customize and extend it to fit your needs.

Does remote-mcp-chat support multi-turn conversations?

Yes, it supports interactive multi-turn chat sessions with remote MCP servers.
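Multi-turn chat generally works by keeping the whole message history and sending it with every request, so the model sees earlier turns. A minimal sketch of that pattern, using OpenAI-style `role`/`content` message dicts; `chat_turn` is hypothetical, and `reply_fn` stands in for whatever call the client actually makes (including any MCP tool calls).

```python
from typing import Callable

# One chat message in OpenAI-style format, e.g. {"role": "user", "content": "hi"}.
Message = dict[str, str]


def chat_turn(
    history: list[Message],
    user_text: str,
    reply_fn: Callable[[list[Message]], str],
) -> str:
    """Append the user's message, get a reply for the full history, record it."""
    history.append({"role": "user", "content": user_text})
    reply = reply_fn(history)  # the model sees every earlier turn
    history.append({"role": "assistant", "content": reply})
    return reply
```

Because `history` is mutated in place, each call builds on the previous ones; a real client would also need to trim or summarize old turns once the context window fills up.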