CF-MCP-Client is a Spring chatbot application that can be deployed to Cloud Foundry and consume platform AI services. It is built with Spring AI. It uses the Model Context Protocol (MCP) to give the LLM access to external tools, and it can optionally integrate with a memGPT service.

Prerequisites:

- Java 21 or higher

- Maven 3.8+

- Access to a Cloud Foundry Foundation with the GenAI tile or other LLM services

- Developer access to your Cloud Foundry environment

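To deploy the application:

- Build the application package:

```bash
mvn clean package
```

- Push the application to Cloud Foundry:

```bash
cf push
```

To give the chatbot a chat model, bind an LLM service. The commands below assume the application is named `ai-tool-chat`. Replace `[plan-name]` with one of the plans listed by `cf marketplace`.

- Create a service instance that provides chat LLM capabilities:

```bash
cf create-service genai [plan-name] chat-llm
```

- Bind the service to your application:

```bash
cf bind-service ai-tool-chat chat-llm
```

- Restart your application to apply the binding:

```bash
cf restart ai-tool-chat
```

Now your chatbot will use the LLM to respond to chat requests.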

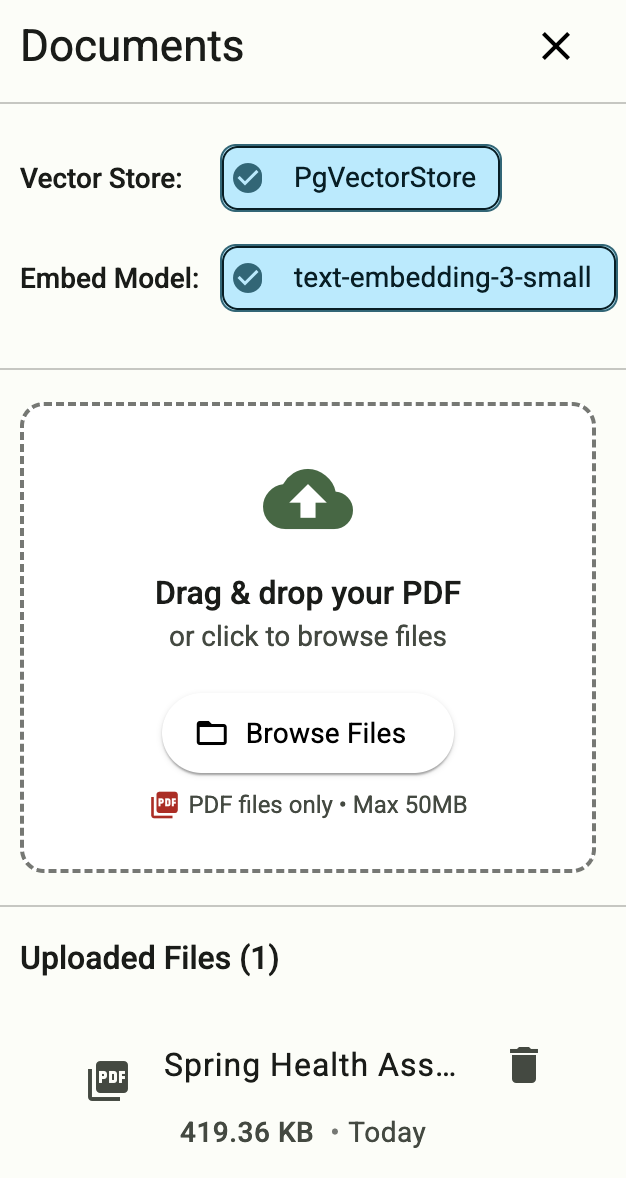

To let the chatbot answer questions about uploaded documents, add an embedding model and a vector database:

- Create a service instance that provides embedding LLM capabilities:

```bash
cf create-service genai [plan-name] embedding-llm
```

- Create a Postgres service instance to use as a vector database:

```bash
cf create-service postgres on-demand-postgres-db vector-db
```

- Bind both services to your application:

```bash
cf bind-service ai-tool-chat embedding-llm
cf bind-service ai-tool-chat vector-db
```

- Restart your application to apply the bindings:

```bash
cf restart ai-tool-chat
```

Now your chatbot can answer queries about documents uploaded to it.

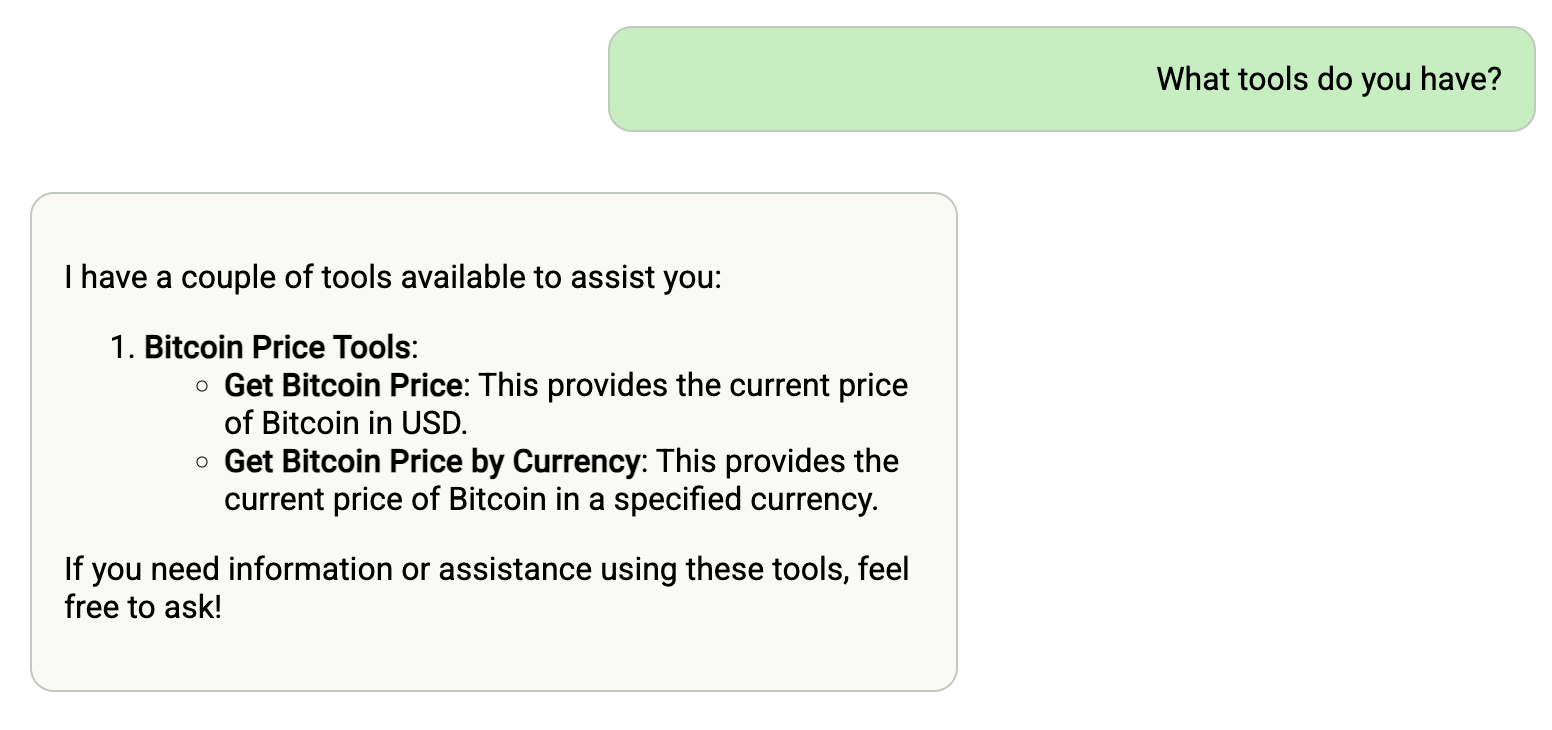
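Roughly speaking, the embedding model turns each document chunk and each query into a vector. The vector database then returns the chunks whose vectors are closest to the query's vector. The sketch below shows that nearest-neighbour step in plain Java, using cosine similarity over made-up three-dimensional vectors. It illustrates the general retrieval technique and is not this application's code. In this deployment, the Postgres service presumably performs the search itself (for example, with the pgvector extension). The class and chunk names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class CosineRetrievalSketch {

    // A stored document chunk and its embedding vector (toy values below, not real model output).
    record Chunk(String text, double[] embedding) {}

    // Cosine similarity: dot(a, b) / (|a| * |b|); 1.0 means the vectors point the same way.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Return the k chunks whose embeddings are most similar to the query embedding, best first.
    static List<Chunk> topK(List<Chunk> chunks, double[] query, int k) {
        List<Chunk> ranked = new ArrayList<>(chunks);
        ranked.sort(Comparator.comparingDouble((Chunk c) -> cosine(c.embedding(), query)).reversed());
        return ranked.subList(0, Math.min(k, ranked.size()));
    }

    public static void main(String[] args) {
        List<Chunk> chunks = List.of(
            new Chunk("Cloud Foundry pushes apps", new double[] {0.9, 0.1, 0.0}),
            new Chunk("Postgres stores vectors", new double[] {0.1, 0.9, 0.1}),
            new Chunk("MCP exposes tools", new double[] {0.0, 0.2, 0.9}));
        // Pretend embedding of a question such as "where are the vectors stored?"
        double[] query = {0.2, 0.8, 0.0};
        for (Chunk c : topK(chunks, query, 2)) {
            System.out.println(c.text());
        }
    }
}
```

Running `main` prints `Postgres stores vectors` followed by `Cloud Foundry pushes apps`, which are the two chunks closest to the query. Cosine similarity is shown here because it is a common choice for text embeddings. The metric actually used depends on how the vector store is configured.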

Model Context Protocol (MCP) servers are lightweight programs that expose specific capabilities to AI models through a standardized interface. These servers act as bridges between LLMs and external tools, data sources, or services, allowing your AI application to perform actions like searching databases, accessing files, or calling external APIs without complex custom integrations.
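On the wire, MCP messages are JSON-RPC 2.0 requests. A client discovers a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool's `name` and its `arguments`. The sketch below only builds those request bodies as strings, to show their shape. The tool name `search_database` and its `query` argument are hypothetical, and arguments are limited to strings for brevity. A real MCP client also performs the `initialize` handshake and handles the transport, and this sketch does neither.

```java
import java.util.Map;
import java.util.stream.Collectors;

public class McpRequestSketch {

    // Minimal JSON string escaping for quotes, backslashes and control characters.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char ch : s.toCharArray()) {
            switch (ch) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (ch < 0x20) sb.append(String.format("\\u%04x", (int) ch));
                    else sb.append(ch);
                }
            }
        }
        return sb.append('"').toString();
    }

    // JSON-RPC 2.0 request asking the server which tools it exposes.
    static String toolsList(int id) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id + ",\"method\":\"tools/list\"}";
    }

    // JSON-RPC 2.0 request invoking one tool with string-valued arguments.
    static String toolsCall(int id, String tool, Map<String, String> arguments) {
        String args = arguments.entrySet().stream()
            .map(e -> quote(e.getKey()) + ":" + quote(e.getValue()))
            .collect(Collectors.joining(",", "{", "}"));
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
            + ",\"method\":\"tools/call\",\"params\":{\"name\":" + quote(tool)
            + ",\"arguments\":" + args + "}}";
    }

    public static void main(String[] args) {
        System.out.println(toolsList(1));
        // "search_database" and "query" are hypothetical tool and argument names.
        System.out.println(toolsCall(2, "search_database", Map.of("query", "open orders")));
    }
}
```

Running `main` prints `{"jsonrpc":"2.0","id":1,"method":"tools/list"}` followed by the matching `tools/call` request. This standard message shape is what lets one client talk to any MCP server without a custom integration.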

- Create a user-provided service that provides the URL of an existing MCP server:

```bash
cf cups mcp-server -p '{"mcpServiceURL":"https://your-mcp-server.example.com"}'
```

- Bind the MCP service to your application:

```bash
cf bind-service ai-tool-chat mcp-server
```

- Restart your application:

```bash
cf restart ai-tool-chat
```

Your chatbot will now register with the MCP server. The LLM can then invoke the server's capabilities when responding to chat requests.
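Cloud Foundry passes the credentials of bound services to the application in the `VCAP_SERVICES` environment variable. A user-provided service created with `cf cups` appears under the `user-provided` key, and the JSON you passed to `-p` becomes its `credentials`. The sketch below shows how an app could look up `mcpServiceURL` by service instance name. To stay dependency-free it uses a deliberately tiny JSON parser, and the sample payload is abbreviated. This is not necessarily how CF-MCP-Client reads its bindings: Spring apps often use a library such as java-cfenv. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

public class VcapLookupSketch {

    // Tiny recursive-descent JSON parser for illustration only. It assumes well-formed input
    // and supports only the string escapes handled below; a real app should use a JSON library.
    static final class Parser {
        private final String s;
        private int i;
        Parser(String s) { this.s = s; }

        Object parse() {
            skipWs();
            char c = s.charAt(i);
            if (c == '{') return object();
            if (c == '[') return array();
            if (c == '"') return string();
            if (s.startsWith("true", i)) { i += 4; return true; }
            if (s.startsWith("false", i)) { i += 5; return false; }
            if (s.startsWith("null", i)) { i += 4; return null; }
            int start = i;
            while (i < s.length() && "+-0123456789.eE".indexOf(s.charAt(i)) >= 0) i++;
            return Double.parseDouble(s.substring(start, i));
        }

        private Map<String, Object> object() {
            Map<String, Object> map = new LinkedHashMap<>();
            i++; // consume '{'
            skipWs();
            while (s.charAt(i) != '}') {
                String key = string();
                skipWs();
                i++; // consume ':'
                map.put(key, parse());
                skipWs();
                if (s.charAt(i) == ',') { i++; skipWs(); }
            }
            i++; // consume '}'
            return map;
        }

        private List<Object> array() {
            List<Object> list = new ArrayList<>();
            i++; // consume '['
            skipWs();
            while (s.charAt(i) != ']') {
                list.add(parse());
                skipWs();
                if (s.charAt(i) == ',') { i++; skipWs(); }
            }
            i++; // consume ']'
            return list;
        }

        private String string() {
            StringBuilder sb = new StringBuilder();
            i++; // consume opening quote
            while (s.charAt(i) != '"') {
                char c = s.charAt(i++);
                if (c != '\\') { sb.append(c); continue; }
                char e = s.charAt(i++);
                switch (e) {
                    case 'n' -> sb.append('\n');
                    case 't' -> sb.append('\t');
                    case 'r' -> sb.append('\r');
                    case 'u' -> { sb.append((char) Integer.parseInt(s.substring(i, i + 4), 16)); i += 4; }
                    default -> sb.append(e); // covers the quote, backslash and slash escapes
                }
            }
            i++; // consume closing quote
            return sb.toString();
        }

        private void skipWs() {
            while (i < s.length() && Character.isWhitespace(s.charAt(i))) i++;
        }
    }

    // Look up the credentials of a user-provided service instance by its name.
    @SuppressWarnings("unchecked")
    static Optional<Map<String, Object>> userProvidedCredentials(String vcapJson, String name) {
        Map<String, Object> vcap = (Map<String, Object>) new Parser(vcapJson).parse();
        List<Map<String, Object>> services =
            (List<Map<String, Object>>) vcap.getOrDefault("user-provided", List.of());
        return services.stream()
            .filter(svc -> name.equals(svc.get("name")))
            .findFirst()
            .map(svc -> (Map<String, Object>) svc.get("credentials"));
    }

    public static void main(String[] args) {
        // On Cloud Foundry this would come from System.getenv("VCAP_SERVICES"); abbreviated sample.
        String vcap = """
            {"user-provided": [{"name": "mcp-server", "syslog_drain_url": null,
              "credentials": {"mcpServiceURL": "https://your-mcp-server.example.com"}}]}""";
        userProvidedCredentials(vcap, "mcp-server")
            .map(creds -> creds.get("mcpServiceURL"))
            .ifPresent(System.out::println);
    }
}
```

Running `main` prints `https://your-mcp-server.example.com`. The same lookup, with the instance name `memGPT` and the key `memGPTUrl`, would find the memGPT binding described below.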

If you have access to a compatible memGPT implementation service:

- Create a user-provided service for the memGPT service:

```bash
cf cups memGPT -p '{"memGPTUrl":"https://your-memgpt-service.example.com"}'
```

- Bind the memGPT service to your application:

```bash
cf bind-service ai-tool-chat memGPT
```

- Restart your application:

```bash
cf restart ai-tool-chat
```